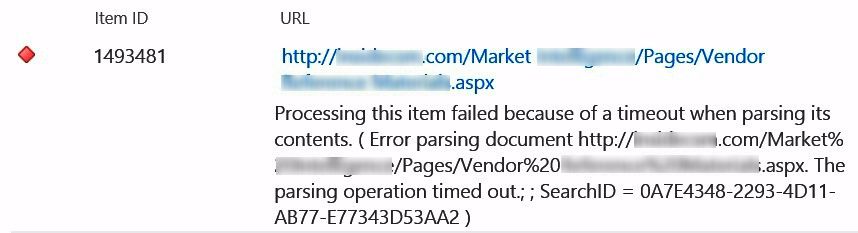

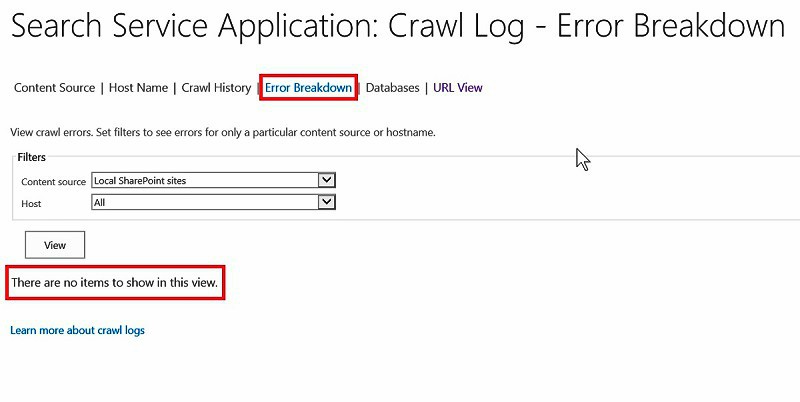

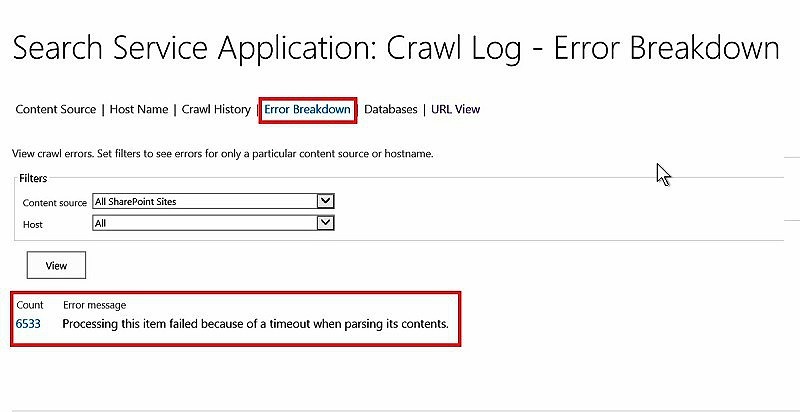

A few days ago, one of the SharePoint farms my team maintains, after running fine for a few months, suddenly started logging the error message “SharePoint Crawl Error- Processing this item failed because of a timeout when parsing its contents” for almost all the content the crawler was trying to index.

Background

It was a medium-sized farm with 2 front-end servers, 2 application servers, and 2 database servers configured with Always On availability groups. The search crawl was scheduled as “Continuous Crawl” with an “Incremental Crawl” every 4 hours. Initially we thought it might be a temporary issue that would resolve on its own during subsequent crawls, when the crawler runs during off-peak hours. We waited for a few days, but that never happened!

Initial Findings

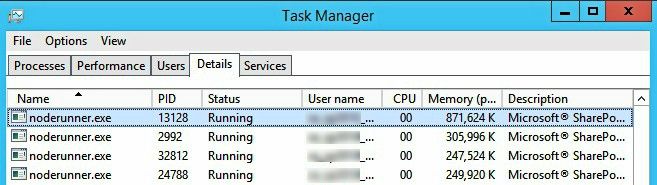

We started exploring a bit. The first thing we found was that whenever the crawl ran, CPU utilization on both application servers stayed at about 20%-30%, but memory utilization reached 99%!
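One quick way to see where the memory is going on a server is to list the processes with the largest working sets. This is a minimal sketch using only built-in cmdlets; the top-10 cutoff and the `WorkingSetMB` column name are arbitrary choices for illustration, not something from our original troubleshooting.

```powershell
# List the ten processes with the largest working set, shown in MB.
Get-Process |
    Sort-Object -Property WorkingSet64 -Descending |
    Select-Object -First 10 -Property Name, Id,
        @{ Name = 'WorkingSetMB'; Expression = { [math]::Round($_.WorkingSet64 / 1MB, 1) } }
```

Running it on each application server while a crawl is in progress should show which processes dominate.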

Further, most of the memory was being used by processes called “noderunner.exe”. Some googling suggested the following change in the noderunner.exe config file:

Open C:\Program Files\Microsoft Office Servers\15.0\Search\Runtime\1.0\noderunner.exe.config and

update <nodeRunnerSettings memoryLimitMegabytes="0" /> to something like <nodeRunnerSettings memoryLimitMegabytes="250" />. This is the setting that limits NodeRunner process memory usage.

Well, personally I didn’t like the idea and didn’t want to play with any configuration file that comes packaged with SharePoint, so I didn’t go for that.
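If you only want to see what the limit is currently set to, without editing anything, something like the following should do. It is a read-only sketch: the path is the one quoted above (the version folder may differ on your release), and it assumes the `nodeRunnerSettings` element carries no XML namespace.

```powershell
# Path as quoted in the post; adjust the version folder for your install.
$configPath = 'C:\Program Files\Microsoft Office Servers\15.0\Search\Runtime\1.0\noderunner.exe.config'

if (Test-Path -LiteralPath $configPath) {
    # Read-only: locate the nodeRunnerSettings element wherever it sits in the file.
    $match = Select-Xml -LiteralPath $configPath -XPath '//nodeRunnerSettings' |
        Select-Object -First 1

    if ($match) {
        # Prints the current value of memoryLimitMegabytes.
        $match.Node.GetAttribute('memoryLimitMegabytes')
    } else {
        Write-Warning 'nodeRunnerSettings element not found.'
    }
} else {
    Write-Warning "Config file not found: $configPath"
}
```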

Further Findings

A little more googling turned up this old blog post on TechNet. It was written for SP 2013 RTM and we were dealing with SP 2016 with Feature Pack 1, so I thought it was not so relevant. Anyway, half of the solution in that article was the same config change I had already decided not to go ahead with.

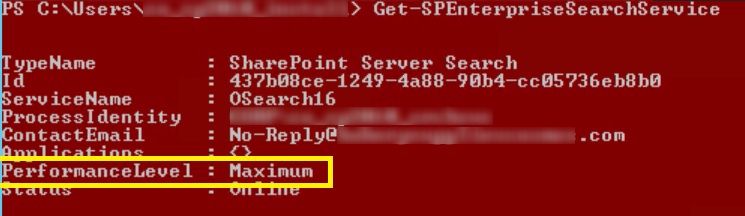

But the other half was interesting: it talked about changing the indexer performance setting in SharePoint using the Set-SPEnterpriseSearchService PowerShell command. So I explored that further and looked into the related TechNet article.

A quick read of the Performance Level parameter revealed that it specifies the relative number of threads for the crawl component performance.

The type must be one of the following values: Reduced, PartlyReduced, or Maximum. The default value is Maximum.

Maximum: Total number of threads = 32 times the number of processors, Max Threads/host = 8 plus the number of processors. Threads are assigned Normal priority.

PartlyReduced: Total number of threads = 16 times the number of processors, Max Threads/host = 8 plus the number of processors. Threads are assigned Below Normal priority.

Reduced: Total number of threads = number of processors, Max Threads/host = number of processors.
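To get a feel for what these formulas mean on a given server, here is a small sketch that simply applies them to a processor count. `Get-CrawlThreadEstimate` is a hypothetical helper written for this post, not a SharePoint cmdlet, and it only restates the arithmetic quoted above.

```powershell
# Hypothetical helper: applies the documented formulas to a processor count.
function Get-CrawlThreadEstimate {
    param(
        [Parameter(Mandatory)]
        [ValidateRange(1, 1024)]
        [int]$ProcessorCount
    )

    # Maximum: 32 x processors in total, 8 + processors per host.
    [pscustomobject]@{
        Level             = 'Maximum'
        TotalThreads      = 32 * $ProcessorCount
        MaxThreadsPerHost = 8 + $ProcessorCount
    }
    # PartlyReduced: 16 x processors in total, 8 + processors per host.
    [pscustomobject]@{
        Level             = 'PartlyReduced'
        TotalThreads      = 16 * $ProcessorCount
        MaxThreadsPerHost = 8 + $ProcessorCount
    }
    # Reduced: one thread per processor, both in total and per host.
    [pscustomobject]@{
        Level             = 'Reduced'
        TotalThreads      = $ProcessorCount
        MaxThreadsPerHost = $ProcessorCount
    }
}

Get-CrawlThreadEstimate -ProcessorCount 8 | Format-Table -AutoSize
```

For an 8-processor server this gives 256 total threads at Maximum, 128 at PartlyReduced and 8 at Reduced, which shows how large the gap between the levels is.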

So I went ahead and checked the current configuration in the SharePoint farm and, as expected, it was set to Maximum.
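For reference, a check like this can be done from the SharePoint Management Shell by reading the `PerformanceLevel` property of the search service object. A minimal sketch; the `Add-PSSnapin` line is only needed when running from a plain PowerShell window.

```powershell
# Load the SharePoint cmdlets if this is not the SharePoint Management Shell.
Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue

# Show the current crawl performance level (Reduced, PartlyReduced or Maximum).
(Get-SPEnterpriseSearchService).PerformanceLevel
```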

Presumably, the more crawl threads there are, the more items get pushed through the noderunner.exe processes at the same time, resulting in more memory usage.

Solution

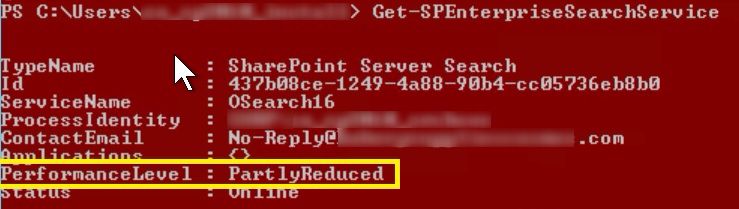

So, an easy fix: change it to PartlyReduced first and see if that solves the issue. I went ahead and ran these PowerShell commands in the SharePoint Management Shell:

[code lang="powershell"]

# Lower the crawl performance level for the farm's search service
Set-SPEnterpriseSearchService -PerformanceLevel PartlyReduced

# Restart the SharePoint Search Host Controller service
Restart-Service SPSearchHostController

[/code]

Confirm that the Performance Level configuration has changed by running the following command:

[code lang="powershell"]Get-SPEnterpriseSearchService[/code]

This solved the problem in our SharePoint environment. If you still get the error for a few files, you can further reduce the number of threads by using:

[code lang="powershell"]

# Drop to the lowest crawl performance level
Set-SPEnterpriseSearchService -PerformanceLevel Reduced

# Restart the SharePoint Search Host Controller service
Restart-Service SPSearchHostController

[/code]

You don’t need to update the noderunner.exe config file manually. Fewer threads will take care of the memory utilization.

Final Touch

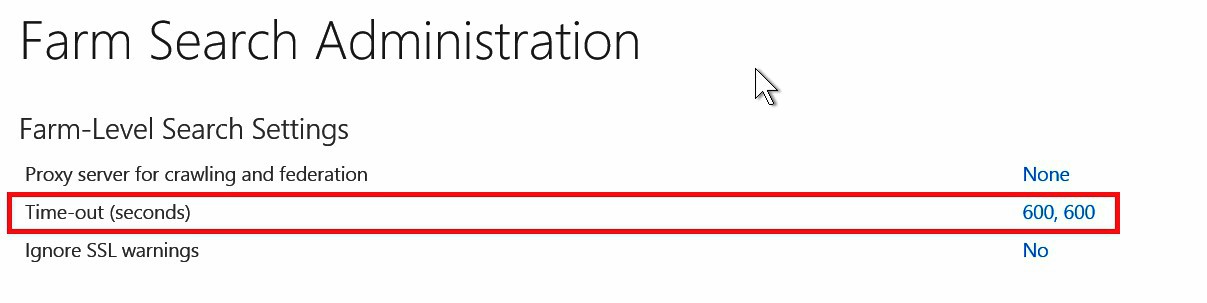

As a final touch, I increased the timeout value under Farm Search Administration from 60 to 600.

And that’s it. All good now, all items are getting crawled successfully and memory utilization is under control too… happy days 🙂
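I made this change in Central Administration, but the same setting can probably be scripted as well. The sketch below is an assumption rather than what I actually ran: it uses the `-ConnectionTimeout` and `-AcknowledgementTimeout` parameters of Set-SPEnterpriseSearchService, and it assumes both timeouts (in seconds) should go to 600.

```powershell
# Assumption: both farm-level search timeouts are raised to 600 seconds.
$searchService = Get-SPEnterpriseSearchService

# Show the current values before changing anything.
$searchService | Select-Object ConnectionTimeout, AcknowledgementTimeout

# Raise both timeouts.
Set-SPEnterpriseSearchService -Identity $searchService `
    -ConnectionTimeout 600 -AcknowledgementTimeout 600
```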

Enjoy,

Anupam

1 comment

Hi Anupam,

I am getting the error below – Processing this item failed because the parser server ran out of memory. ( Error parsing document ssIC:) Document failed to be processed. It probably crashed the server.SearchID:…..

I have tried these options, but nothing is working in my case. Plus, the server already has more than enough memory. Let me know what you think could be the cause of the issue.